The new version implements two quick and general methods to compute the EVPPI. These are both "univariate" methods, in the sense that they compute the impact of each model parameter separately on the overall expected value of information. This is not necessarily ideal, because there might be (and in general there is) posterior correlation among the model parameters, and thus it would be better to account for that in computing the EVPPI.

Of course, one can always perform multivariate EVPPI analysis using the 2-stage MCMC approach (described in BMHE) $-$ but that is very likely to be computationally intensive. Apparently, a new version of Strong and Oakley's paper is under preparation to deal with multivariate EVPPIs.
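For reference, the quantity all these methods try to estimate can be written as below. The notation is an assumption made here for illustration and is not taken from the package: $\phi$ is the parameter (or subset of parameters) of interest, $\psi$ collects all the remaining parameters and $\mathrm{NB}_t$ is the net benefit of intervention $t$.

$$
\mathrm{EVPPI}(\phi) = \mathrm{E}_{\phi}\left[\max_t \, \mathrm{E}_{\psi\mid\phi}\left[\mathrm{NB}_t(\phi,\psi)\right]\right] - \max_t \, \mathrm{E}_{\phi,\psi}\left[\mathrm{NB}_t(\phi,\psi)\right]
$$

The inner expectation is conditional on each value of $\phi$. A 2-stage approach evaluates it with a separate set of simulations for every simulated value of $\phi$, which is where the computational cost comes from.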

Here's an example of how to use the new code, from R $-$ when I've actually uploaded it, that is...

library(BCEA)

data(Vaccine)

ls()

[1] "c" "cost.GP" "cost.hosp" "cost.otc"

[5] "cost.time.off" "cost.time.vac" "cost.travel" "cost.trt1"

[9] "cost.trt2" "cost.vac" "e" "MCMCvaccine"

[13] "N" "N.outcomes" "N.resources" "QALYs.adv"

[17] "QALYs.death" "QALYs.hosp" "QALYs.inf" "QALYs.pne"

[21] "treats"

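m <- bcea(e,c,ref=2,interventions=treats) # basic health economic analysis (arguments assumed here, not shown in the original listing)

input <- CreateInputs(MCMCvaccine)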

x.so <- evppi("xi",input$mat,m,n.blocks=20) # Strong & Oakley method

x.sal <- evppi("xi",input$mat,m,n.seps=2) # Sadatsafavi et al method

Of course, you load the package and then the dataset which comes with it. It includes the variables needed to run the health economic analysis, which is done by calling the function bcea. So far, nothing new (as far as BCEA is concerned).

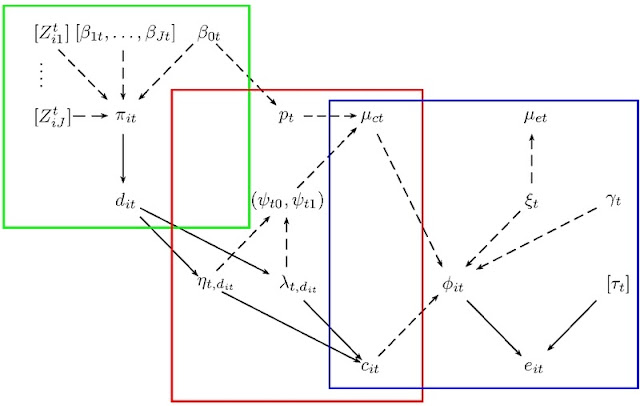

The new bit involves a call to the function CreateInputs, which takes as input an object of class rjags or bugs; this is basically the result of the MCMC model that produces the posterior simulations for the variables of interest (in the current case, that's essentially e and c). The output of CreateInputs is a list including the string vector of available parameters that can be used for the EVPPI analysis and a matrix with the simulations for all of them (each column is a parameter).

Finally, you can compute the EVPPI for one or more parameters by calling the function evppi (note that the first argument of the function can be a vector including more than one parameter; in this case, the univariate analysis will be repeated for each of them separately). The other arguments are the matrix of simulations, the BCEA object including the basic health economic analysis for the current model and a specification of the method chosen.

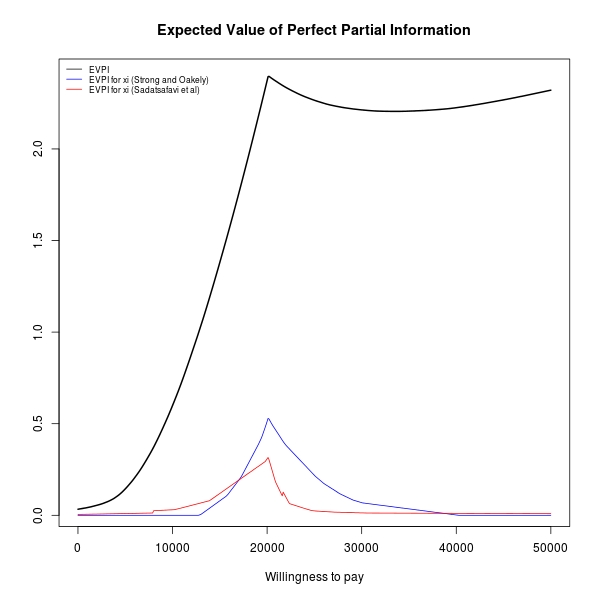

The results can be visualised using the plot method for the class evppi; the plot shows the overall EVPI together with the EVPPI for the selected parameter(s).

The two methods (neither of which estimates the EVPPI without bias, although both have been shown to do a good job!) generally agree $-$ although there is some fiddling to do with their tuning parameters (for example, the number of blocks into which the matrix of parameter simulations and utilities is split in Strong & Oakley's method).
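To get a feel for why the number of blocks needs some fiddling, here is a minimal base-R sketch of the block idea: sort the simulations by the parameter of interest, split them into equally sized blocks, average the net benefits within each block and take the maximum of the block means. This is only an illustration under stated assumptions and not the code used inside evppi; the toy two-decision model, the function name `evppi.blocks.sketch` and all variable names are made up.

```r
# Toy two-decision model (hypothetical, unrelated to the Vaccine example)
set.seed(1)
n.sims <- 10000
phi <- rnorm(n.sims)                      # parameter of interest
psi <- rnorm(n.sims)                      # all the remaining uncertainty
# Net benefits, one column per decision (made-up functional form)
nb <- cbind(t0 = rep(0, n.sims), t1 = 0.5 * phi + psi)

# Block-based single-parameter EVPPI estimate (illustrative sketch)
evppi.blocks.sketch <- function(param, nb, n.blocks) {
  n <- length(param)
  stopifnot(nrow(nb) == n, n %% n.blocks == 0)   # equally sized blocks only
  nb.sorted <- nb[order(param), , drop = FALSE]  # sort simulations by the parameter
  block <- rep(seq_len(n.blocks), each = n / n.blocks)
  # Mean net benefit of each decision within each block
  block.means <- rowsum(nb.sorted, block) / (n / n.blocks)
  # Average of the best block means, minus the value of the current best decision
  mean(apply(block.means, 1, max)) - max(colMeans(nb))
}

# Overall EVPI from the same simulations, for comparison
evpi <- mean(apply(nb, 1, max)) - max(colMeans(nb))

est20 <- evppi.blocks.sketch(phi, nb, n.blocks = 20)
print(c(EVPPI.20.blocks = est20, EVPI = evpi))
```

With equally sized blocks, the two extremes show what is at stake: a single block returns 0, while one block per simulation simply reproduces the EVPI. Sensible values lie in between, and in this toy model the exact EVPPI for phi is 0.5/sqrt(2*pi), which can be used to check how a given choice behaves.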